Introduction

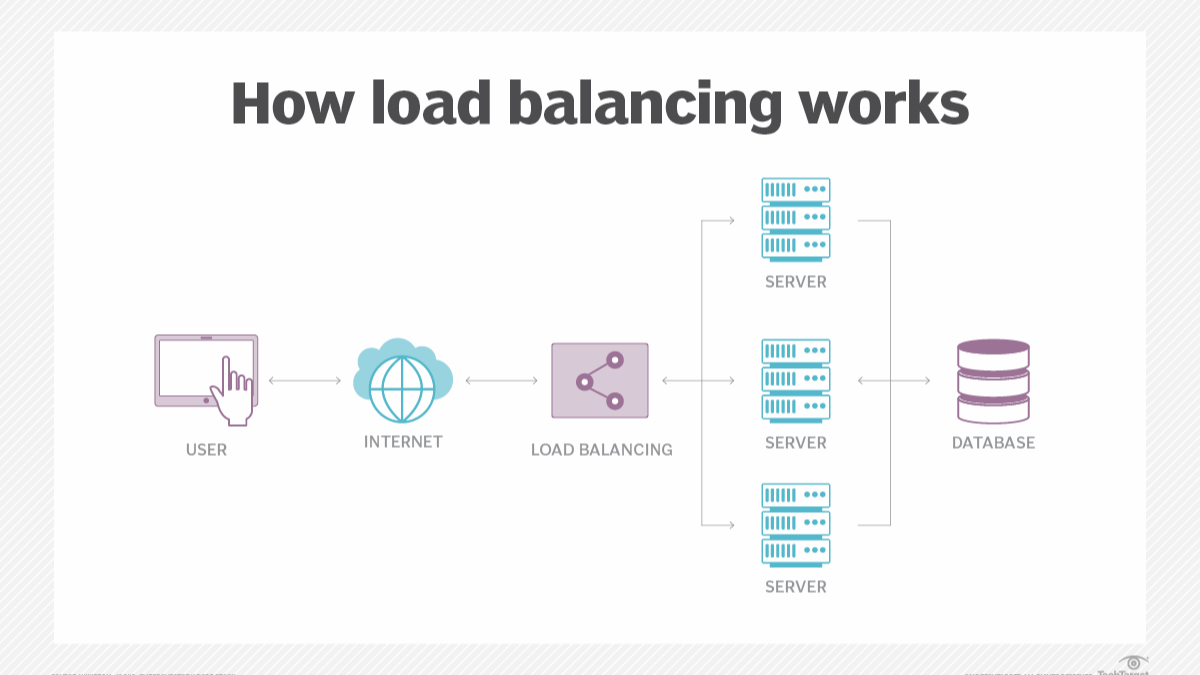

When I first heard the term Load Balancing, I imagined some giant machine splitting incoming requests like a traffic cop.

Turns out… that’s pretty accurate.

Before understanding load balancing, I always wondered:

- Why do some websites stay stable even with millions of users online?

- How do large platforms avoid downtime when a server crashes?

- Why doesn’t traffic overload a single machine?

The answer is simple: Load Balancers.

They distribute traffic efficiently, increase reliability, ensure high availability, and make modern distributed systems possible.

Let’s break down the core concepts.

My Old Understanding of Load Balancing

Earlier, I thought load balancing was only about “splitting traffic in two.”

Something like:

User → Server A

User → Server B

I assumed it was just a round-robin traffic distributor.

No idea about health checks, algorithms, failover, or SSL termination.

Most beginners think the same because tutorials often jump into Nginx or HAProxy configs without explaining the why behind load balancing.

My New Understanding of Load Balancing

Once I dug deeper into DevOps, I realised load balancing is far more capable than I thought.

A Load Balancer:

- Distributes incoming traffic across multiple servers.

- Detects unhealthy servers and stops sending requests to them.

- Improves application reliability.

- Helps scale horizontally (add more servers as traffic grows).

- Protects applications from failures by providing high availability.

Why is Load Balancing Important?

- Prevents overload on a single server.

- Enables zero-downtime deployments.

- Ensures consistent performance under heavy load.

- Keeps applications running even if one server fails.

It’s like having multiple cash counters in a mall.

One counter overloaded? Customers move to the next automatically.

Types of Load Balancers

1. Hardware Load Balancers

High-performance physical appliances used in enterprises.

Expensive but extremely reliable.

2. Software Load Balancers

The ones we commonly use in DevOps:

- Nginx

- HAProxy

- Envoy

- Traefik

These run on standard servers or containers.

3. Cloud Load Balancers

Managed by cloud providers:

- AWS Elastic Load Balancing (ALB, NLB)

- Google Cloud Load Balancing

- Azure Load Balancer

They scale automatically and require almost no maintenance.

Load Balancing Algorithms

Load balancers use smart algorithms to distribute traffic:

1. Round Robin

Requests are handed to each server in turn, so every server gets an equal share.
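To make the rotation concrete, here is a minimal Python sketch. The server addresses are placeholders and `round_robin` is a hypothetical helper name, not part of any real load balancer.

```python
from itertools import cycle

# Placeholder backend addresses; any list of server identifiers would work.
SERVERS = ["192.168.1.10", "192.168.1.11", "192.168.1.12"]


def round_robin(servers):
    """Return an iterator that yields servers in a fixed, repeating order."""
    return cycle(servers)


picker = round_robin(SERVERS)
# Six requests are spread evenly: each server is chosen twice.
assignments = [next(picker) for _ in range(6)]
print(assignments)
```

Note that the picker knows nothing about how busy each server is. That is the main limitation the other algorithms try to address.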

2. Weighted Round Robin

Servers with higher capacity get more requests.
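One simple way to picture this is to repeat each server in the rotation as many times as its weight. The sketch below does exactly that. The server names and weights are made up for illustration, and this naive expansion is my own simplification: production load balancers typically interleave the picks more smoothly.

```python
from collections import Counter
from itertools import cycle

# Hypothetical weights: "big" has three times the capacity of "small".
WEIGHTS = {"big": 3, "small": 1}


def weighted_round_robin(weights):
    """Cycle through servers, repeating each one `weight` times per round."""
    expanded = [
        server
        for server, weight in weights.items()
        for _ in range(weight)
    ]
    return cycle(expanded)


picker = weighted_round_robin(WEIGHTS)
# Over 8 requests (two full rounds), "big" gets 6 and "small" gets 2.
counts = Counter(next(picker) for _ in range(8))
print(counts)
```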

3. Least Connections

Send traffic to the server with the fewest active connections.
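A rough sketch of the idea, assuming the load balancer keeps a running count of open connections per server. The server names, the counts, and both function names are invented for the example.

```python
# Hypothetical snapshot of active connections per server.
active_connections = {"server-a": 12, "server-b": 4, "server-c": 9}


def pick_least_connections(connections):
    """Return the server currently handling the fewest connections."""
    return min(connections, key=connections.get)


def route_request(connections):
    """Pick a server and record the new connection it now holds."""
    server = pick_least_connections(connections)
    connections[server] += 1
    return server


# server-b has the lowest count (4), so it receives the request.
first = route_request(active_connections)
print(first, active_connections)
```

A real load balancer would also decrement the count when a connection closes, which this sketch leaves out.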

4. IP Hash

Requests from the same client IP always go to the same server, which is useful for sticky sessions.
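Here is a small sketch of how an IP could be mapped to a server. It is an illustration under my own assumptions (SHA-256 as the hash, a hypothetical `pick_by_ip` helper, placeholder server names), not how any particular load balancer implements it. I use `hashlib` rather than Python's built-in `hash()` because the built-in is randomised per process for strings, so it would not give a stable mapping across restarts.

```python
import hashlib

# Placeholder server names for the example.
SERVERS = ["server-a", "server-b", "server-c"]


def pick_by_ip(client_ip, servers):
    """Map a client IP to a server using a stable hash of the address."""
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


# The same IP maps to the same server on every call.
first = pick_by_ip("203.0.113.7", SERVERS)
second = pick_by_ip("203.0.113.7", SERVERS)
print(first == second)  # prints True
```

One caveat: with plain modulo hashing, adding or removing a server changes the mapping for many clients. Consistent hashing is the usual technique for reducing that churn.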

5. Random

Each request goes to a randomly chosen server. Simple, but over many requests the load evens out, so it is still effective in some scenarios.

Each algorithm solves a different problem, and modern load balancers can combine several of them.

Health Checks

One of the most underrated features.

Load balancers regularly probe servers using:

- HTTP checks

- TCP port checks

- HTTPS checks

If a server doesn’t respond or returns errors, the LB marks it unhealthy and removes it from rotation until it recovers.
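As a sketch of the simplest case, a TCP port check just tries to open a connection and sees whether it succeeds. The helper names `tcp_check` and `healthy_servers`, the addresses, and the one-second timeout are my own assumptions for illustration.

```python
import socket


def tcp_check(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def healthy_servers(servers, check=tcp_check):
    """Keep only the (host, port) pairs that pass the health check."""
    return [(host, port) for host, port in servers if check(host, port)]


# Demo with a stand-in check so the example runs without real servers:
# pretend only port 8080 is answering.
pool = [("10.0.0.1", 8080), ("10.0.0.2", 9090)]
in_rotation = healthy_servers(pool, check=lambda host, port: port == 8080)
print(in_rotation)
```

In practice the check runs on a schedule, and a server is usually marked unhealthy only after several consecutive failures, so a single dropped probe does not pull it out of rotation.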

Example: Load Balancing with Nginx

Here’s a simple load-balancing setup:

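    # nginx requires an events block, even an empty one, in a complete config file.
    events {}

    http {
        # The pool of backend servers; Nginx uses round robin by default.
        upstream backend {
            server 192.168.1.10;
            server 192.168.1.11;
            server 192.168.1.12;
        }

        server {
            listen 80;

            location / {
                # Forward each request to a server from the pool above.
                proxy_pass http://backend;
            }
        }
    }

This configuration distributes incoming traffic across three backend servers.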

Real-World Usage

Load balancing is everywhere:

- Netflix uses it to handle millions of streaming requests.

- E-commerce apps use it during peak sales to avoid crashes.

- SaaS products rely on it for high availability.

- Kubernetes uses internal load balancing for pods and services.

In short: no modern system can survive scale without load balancing.

Resources That Helped Me

- Nginx Load Balancing Docs – Clean and easy examples.

- HAProxy Official Documentation – Excellent for deeper learning.

- AWS ELB Documentation – Best explanation of cloud load balancing.

- TechWorld with Nana – Visual diagrams that make concepts simple.

Conclusion

Load balancing is one of those concepts that looks simple at first but is essential for building scalable, resilient systems. Once you understand how load balancers distribute traffic, handle health checks, and choose algorithms, the whole architecture of modern applications makes more sense.

In upcoming posts, I’ll explore:

- Reverse proxies

- API gateways

- Service mesh vs load balancers

- Setting up load balancing in Kubernetes

Load balancing isn’t just a networking feature; it’s the foundation of reliable infrastructure.
